Each and every one of us is our own little ChatGPT: The things we learn, read, and discuss are how we train our own mental models. What ChatGPT can do is house more information in one place, in a state that’s ready to use, than ever before – and with that comes a risk to our own mental models. To our internal AIs.

Students (and I’m Canadian, so there are probably some differences in how students in other places learn) get some basic media literacy skills in high school. After all, there’s a civics class where students learn about how the government works. We have history classes that give us context about the society we live in. All of this is guided learning.

Fullintel held a webinar with IABC’s Maritime Chapter diving into what ChatGPT is, and how PR professionals can use it. The core discussion eventually developed into: What does this mean for how we learn and consume information?

ChatGPT can be an enormously powerful tool for research and self-guided learning as long as students, and all users, still do their homework. That means training our mental models with facts, taking deeper dives into the subjects we research on ChatGPT, and doing some actual reading.

People are Learning Machines

We learn all the time. It’s what we do: we’re information processors that also happen to walk, talk, and procreate. And just like these new AI tools, we’re constantly learning. From there we synthesize what we learn and organize it in our heads. The whole experience is subconscious – except when we do it intentionally by setting out to learn something specific. That means doing research.

The one trick that students must learn in the age of AI is to read more, to find credible sources, experts, journals, and to understand where the cutting edge is (and where misinformation lives, too).

Without research and reading in more depth, we may become reliant on ChatGPT or similar services for doing our basic thinking. There’s a danger that we’ll actually retrain our brains to learn less and repeat more.

Risks of Associative Memory

Being spoon-fed detailed answers to critical questions will change how our brains work, and change our online information gathering habits. Associative memory is a thing. When you ask someone who knows a lot of stuff and they give you an answer, there’s a good chance you might return to that social connection to dive in more deeply.

Your memory and ability to recall that information becomes associated with the person who gave you that intel. You might not even remember what they initially told you, because it is the other person who did the hard work to ingrain that information in the first place.

Schools Need to Play Catch Up

Getting ahead of this trend means that schools need to understand and use ChatGPT, but also train students to gather valuable information without the use of such a tool. There are three areas where I think schools should invest more time:

1. Research Fundamentals: How to find information, where to find it, and how to source it. We do a little bit of this in high school – or at least we used to in Canada. Doing more of this, and doing it with data as opposed to sourcing tidbits of text for an essay, is required. If students learn these processes well, they’ll be better able to work out how a generative AI comes up with its responses, and what those responses are built on.

It will also make students better prompters when using AI tools.

2. Media Literacy: Some school systems still do an OK job of this, teaching students to look for credible sources of information like legitimate news websites – as opposed to, say, a TikTok channel. Sure, the information is accessible on TikTok or other social channels. And depending on the source, it could even be credible. But just like with ChatGPT, if the information is ladled into the user’s mind from a specific source, the only thing the students learn is how to copy-and-paste facts learned elsewhere. We have to teach students to identify trustworthy influencers.

AIs themselves – especially with the advent of autonomous AI systems such as AutoGPT – will one day be running around on the internet sharing information on their own. Deciphering what’s real and what’s not is going to get harder and harder.

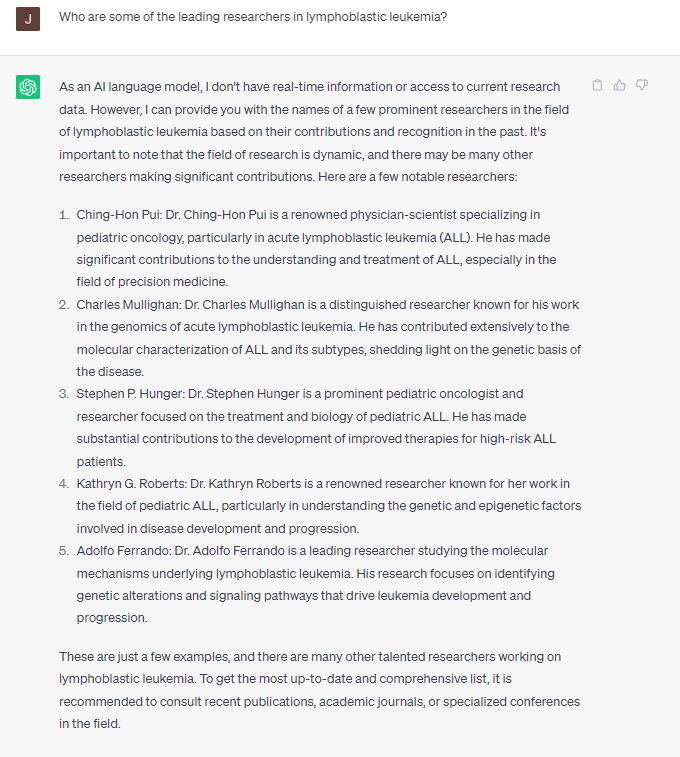

Fullintel asked ChatGPT to identify leading experts in a specific type of cancer, and it provided a great list with a rationale for why each was included. Click to see its response.

3. Data Validation and Scientific Literacy: Single data points on their own are like opinions: Everyone’s got them, and you know what people say about that. Cherry-picking a data point here from some study there, and rattling it off like a certified fact, doesn’t really cut it. Understanding the nature of the issue, reviewing meta-analyses, and consulting with experts are the only way to be certain you are interpreting data correctly.

ChatGPT might actually benefit students here, when they ask it questions like “Who are the leading researchers in lymphoblastic leukemia?” This kind of educated prompting can generate detailed responses that provide breadcrumbs on influencers or experts who should be listened to.

Expanding that into datasets, and synthesizing a variety of expert conclusions, is a complicated task. Asking ChatGPT for a complicated and nuanced answer comes with a lot of caveats that ChatGPT itself exposes to users. At least it does today.

Changing how people learn can change the language we use as a culture. For example, it only took a few years for us to become habituated to saying we “Google” things. That ended the phone book as we know it, and with it the focus on local businesses and contacts. I don’t know what generative AI is going to do to learning as an action, but there’s a phone book being killed somewhere.

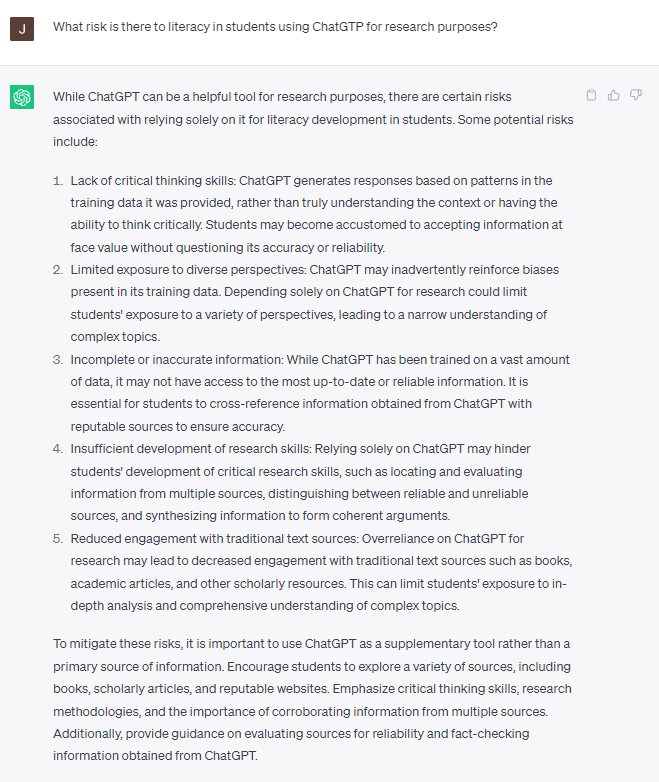

PS. We did ask what ChatGPT thought about this, and its answer was way better than I expected. I’m happy it agrees with my analysis.